Encryption and key management as-a-service

No matter what your data footprint looks like, our platform has you covered. Fully integrated encryption technologies, ironclad key management, and compliance reports at your fingertips.

Secure all your sensitive data sources and workloads in a unified platform, delivered as a service with nothing to deploy, nothing to manage, and no specialized skills required

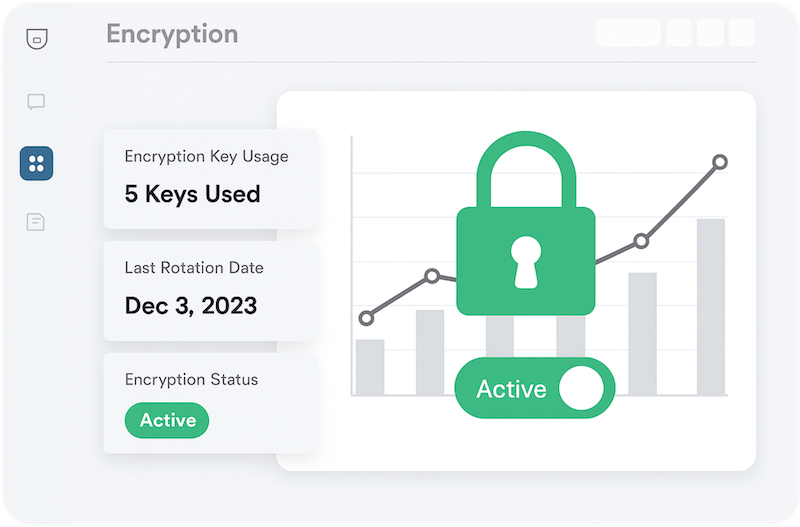

Key security is often an afterthough, but with Sidechain, you get a fully-integrated, high security key management infrastructure (KMI) delivered from the cloud

Hardware Security Modules are a necessary, but cumbersome security add. Sidechain makes achieving FIPS 140-2 Level 3 easy with our data center-based HSM hosting service

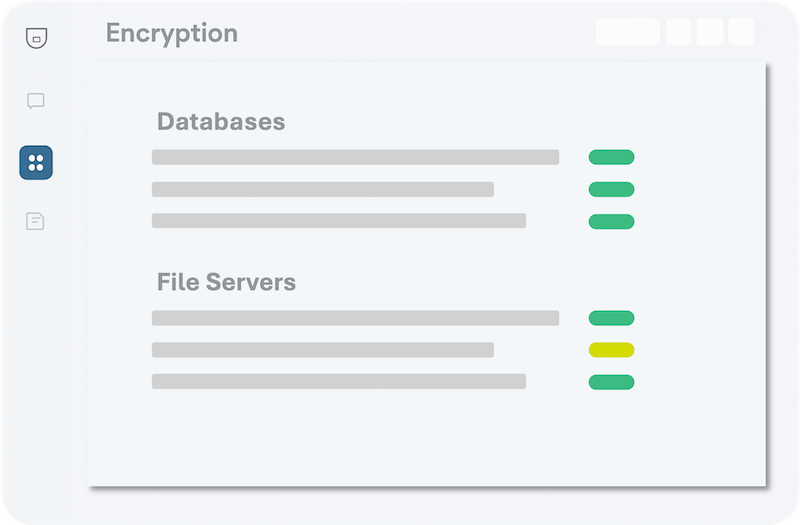

Gain a single pane of glass view into the security of every data source. Instantly know that data security is active and working for databases, file servers, web servers, cloud assets, and more.

Always be prepared for your next information security audit. On-demand compliance reports enable you to demonstrate strong encryption and key management with zero effort.

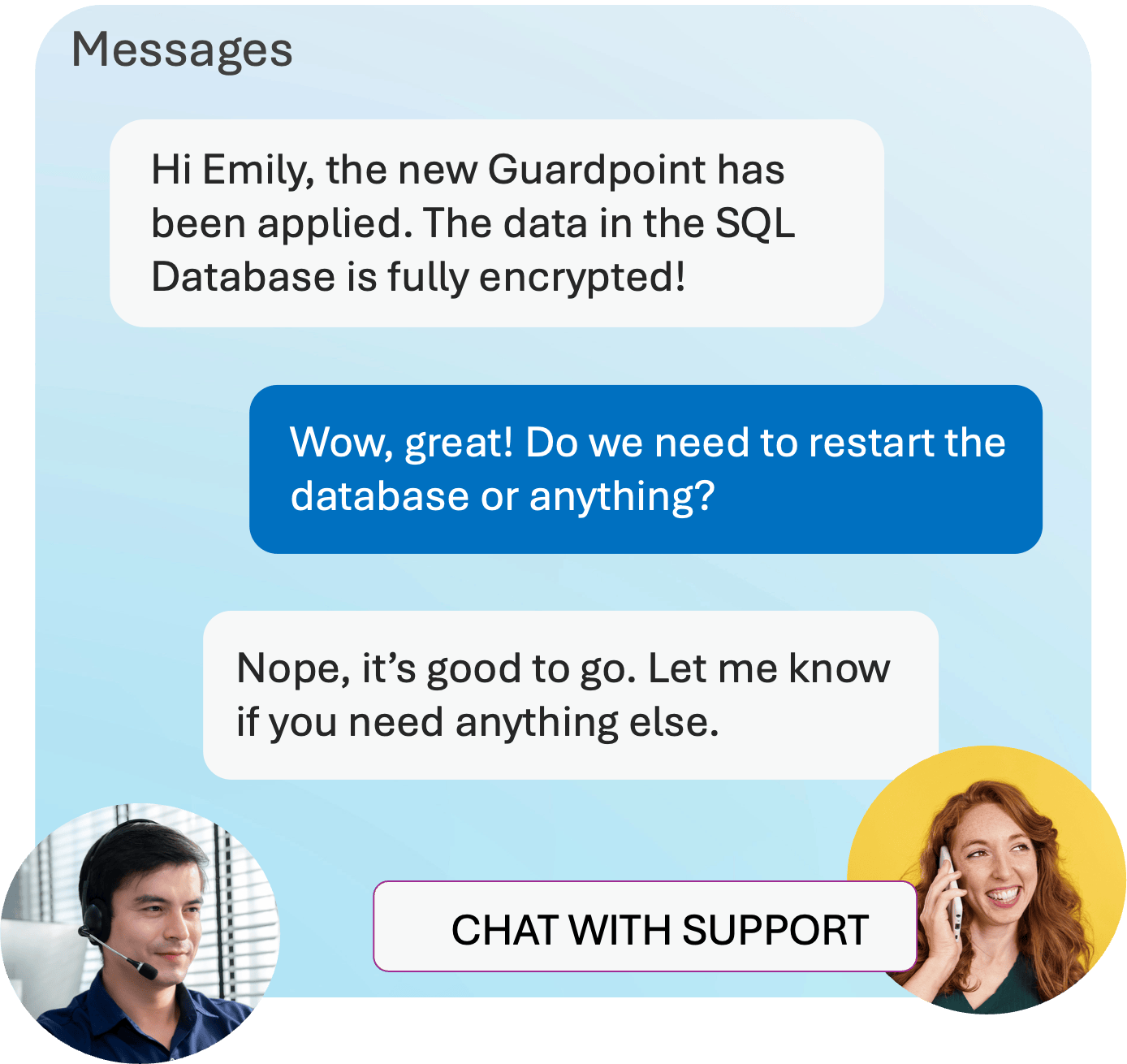

Sidechain gives you a dedicated Named Engineer so you have a direct line to your own data security experts anytime.

We remove the complicated setup, steep price, and long learning curve. Your team can deploy Sidechain data security in minutes, and provide instant value.

We offer complete managed services for the following encryption and key management vendors. Our certified engineers ensure 100% operational stability, ensuring you get the most out of your investment in these solutions

"Of the countless things on my team's plate, managing encryption infrastructure isn't a priority for us. It's pure overhead, especially since it only touches a small portion of our overall environment. But we still need it done right for compliance.

I'm happy to let Sidechain handle all of that so my engineers can focus on projects that actually move our business forward. They know encryption better than we ever will, and frankly, we don't want to become experts in something that's not our core competency."

Achieve enterprise-grade encryption and key management with nothing to deploy and ongoing support from certified engineers

Learn MoreSidechain simplifies data encryption and key management so you can protect your data, nail compliance, and move on.

Should you really be guessing your way through the protection of your most sensitive data? Turn to the experts at Sidechain.

The problem with encryption is that there are so many ways to do it. At Sidechain, we understand the trade-offs between disk encryption, database TDE, file encryption, cloud provider managed encryption, and the countless other ways to encrypt your data.

We've earned our reputation through hundreds of successful implementations and partnerships. When encryption vendors need specialists to deliver their most complex projects, they call us. When auditors review our work, they approve it. When companies need data protection they can count on, they choose focused expertise over jack-of-all-trades providers.

Our track-record of deploying and managing successful encryption and key management services ensures we can help your organization implement the data protection you deserve. We stand by our work, and guarantee a successful outcome.